Our paper titled “Training-Free Layer Selection for Partial Fine-Tuning of Language Models” has been accepted for publication in the journal Information Sciences (Elsevier; Q1 – Computer Science, Information Systems; Percentage Rank: 91.9%; Impact Factor: 6.8; H-index: 243).

Aldrin Kabya Biswas, Md Fahim, Md Tahmid Hasan Fuad, Akm Moshiur Rahman Mazumder, Amin Ahsan Ali, AKM Mahbubur Rahman

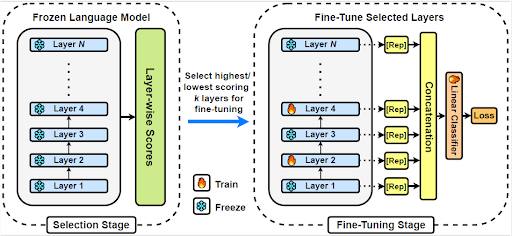

In this work, we propose a training-free, layer-wise partial fine-tuning approach for language models that uses the cosine similarity between representative-token representations across layers. The method identifies inter-layer relationships through a single forward pass, then fine-tunes only the selected layers while keeping the others frozen.
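To make the idea concrete, here is a minimal, hypothetical sketch of similarity-based layer selection. It assumes the representative token's hidden state has already been collected from every layer in one forward pass (for example, Hugging Face models return these when called with `output_hidden_states=True`). The scoring rule here is an illustrative assumption and not necessarily the paper's exact criterion: each layer is scored by the cosine similarity between its input and output representations, and the `k` layers that change the representation most (lowest similarity) are marked trainable. The function names `cosine` and `select_layers` are hypothetical.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def select_layers(layer_reprs: List[List[float]], k: int) -> List[int]:
    """Pick k layers to fine-tune from one forward pass (hypothetical rule).

    layer_reprs[0] is the representative token's embedding output and
    layer_reprs[i] (i >= 1) is its hidden state after layer i.
    """
    n_layers = len(layer_reprs) - 1
    # Similarity between each layer's input and output representation.
    sims = {i: cosine(layer_reprs[i - 1], layer_reprs[i]) for i in range(1, n_layers + 1)}
    # Assumption: layers that transform the representation most (lowest
    # similarity) are the most worth updating; all others stay frozen.
    ranked = sorted(sims, key=lambda i: sims[i])
    return sorted(ranked[:k])


if __name__ == "__main__":
    # Toy 2-D "hidden states" for an embedding output plus 4 layers.
    reprs = [[1.0, 0.0], [1.0, 0.1], [0.0, 1.0], [0.0, 1.1], [-1.0, 0.0]]
    trainable = select_layers(reprs, k=2)
    frozen = [i for i in range(1, len(reprs)) if i not in trainable]
    print("fine-tune:", trainable, "freeze:", frozen)
```

Because the scores come from a single forward pass with no gradient updates, selection costs one inference step regardless of how many layers the model has; the training budget is then spent only on the chosen layers.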
