Two papers by CCDS Senior RAs have been accepted at the prestigious IEEE 23rd International Conference on Machine Learning and Applications (ICMLA), USA!

/in Publications/by aburifat

Huge Congratulations to Our Senior RAs!

We are thrilled to announce that two papers by CCDS Senior RAs Nabarun Halder, Jahanggir Hossain Setu, Tanjina Piash Proma, and Syed Tangim Pasha have been accepted at the prestigious IEEE 23rd International Conference on Machine Learning and Applications (ICMLA), USA!

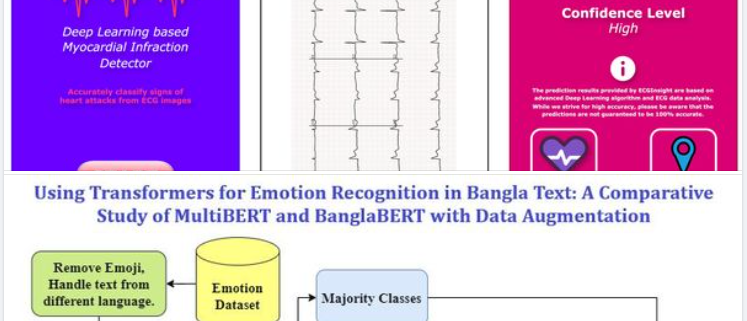

Accepted Titles:

“ECGInsight: A Web Application-Based Approach to Myocardial Infarction Detection From ECG Image Reports Utilizing ResNet”

and

“Using Transformers for Emotion Recognition in Bangla Text: A Comparative Study of MultiBERT and BanglaBERT with Data Augmentation”

With an impressive acceptance rate of just 24.3% this year, this is an excellent achievement. The hard work and dedication of our talented RAs, under the supervision of Dr. Ashraful Islam, have truly paid off.

Congratulations to the team for this remarkable success!

We are incredibly proud of you all and excited to see your contributions making waves in the world of machine learning!

Big congrats to our CCDS RA Farhan Israk Soumik for starting his fully funded PhD journey in the Computer Science program at Southern Illinois University, Carbondale.

/in News/by aburifat

He is currently working as a Graduate Assistant under Dr. Henry Hexmoor.

His research focuses on applying AI to secure video conferencing software such as Google Jamboard, Whiteboard, etc. This is an NSF-funded project.

He joined CCDS around 2022 after completing his bachelor's degree (in CSE) at RUET.

We wish him all the best for his future endeavors.

Beyond Euclid: An Illustrated Guide to Modern Machine Learning with Geometric, Topological, and Algebraic Structure, by Sanborn et al.

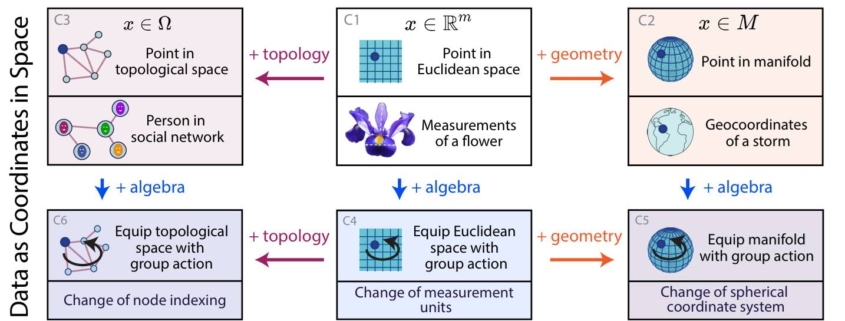

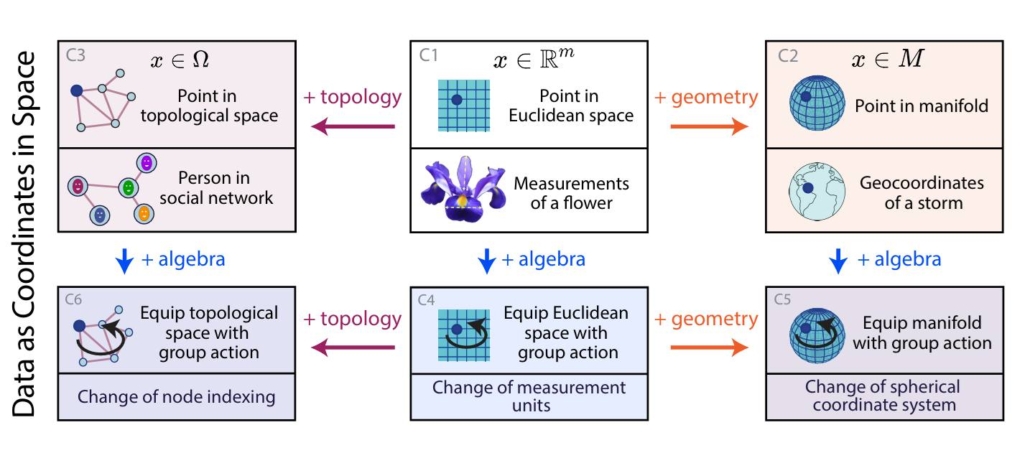

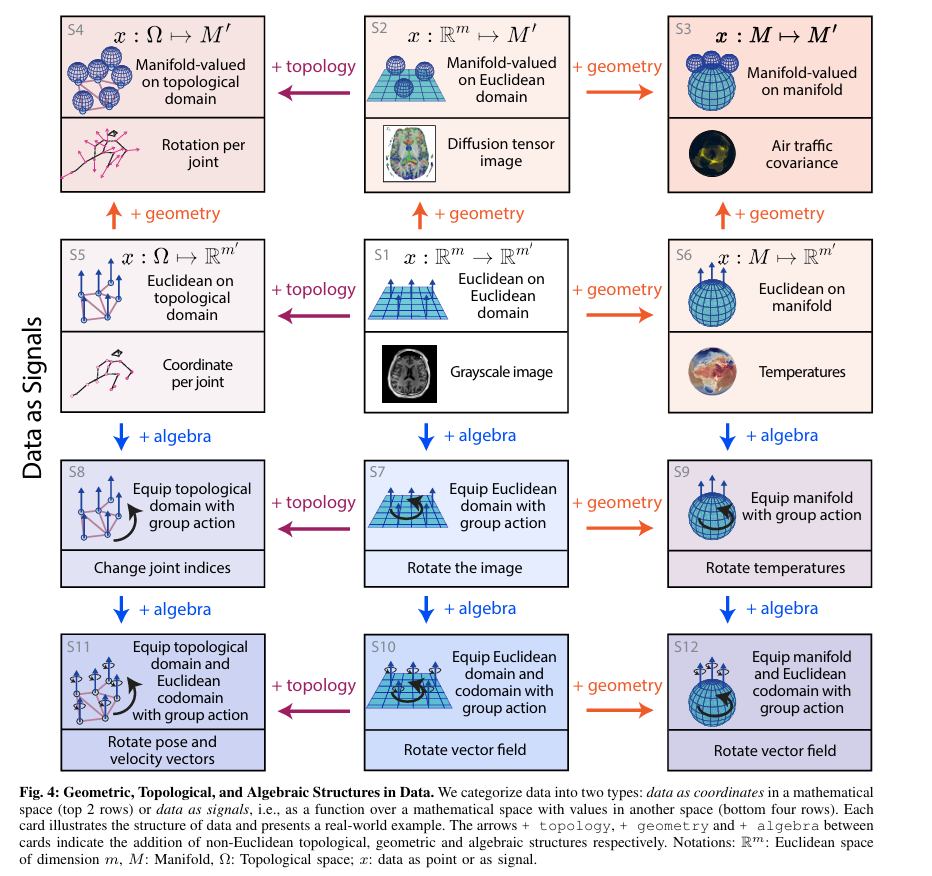

/in News/by aburifat

The two images in this post are from the article titled Beyond Euclid: An Illustrated Guide to Modern Machine Learning with Geometric, Topological, and Algebraic Structure, by Sanborn et al. (https://arxiv.org/abs/2407.09468v1)

The paper discusses how “the mathematics of topology, geometry and algebra provide a conceptual framework to categorize the nature of data found in machine learning.” The two figures present a “graphical taxonomy, to categorize the structures of data.” In the article, the authors discuss two types of data that we generally encounter: “either data as coordinates in space—for example the coordinate of the position of an object in a 2D space; or data as signals over a space—for example, an image is a 3D (RGB) signal defined over a 2D space. In each case, the space can either be a Euclidean space or it can be equipped with topological, geometric and algebraic structures.”
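To make the two data types concrete, here is a minimal Python sketch. It is only an illustration of the distinction quoted above, not code from the paper; the variable names, the tiny image, and the three-node graph are all made up for the example.

```python
# Case 1: data as coordinates in a space.
# The data point itself is a location, here a position in a 2D Euclidean plane.
object_position = (3.0, 4.0)

# Case 2: data as a signal over a space.
# The domain is a 2D grid of pixel locations; the signal assigns a
# 3-channel (R, G, B) value to every location in that domain.
HEIGHT, WIDTH = 2, 3
image = {
    (row, col): (255, 0, 0) if col == 0 else (0, 0, 255)
    for row in range(HEIGHT)
    for col in range(WIDTH)
}

# Same idea, but the domain now carries non-Euclidean (topological) structure:
# a graph, with one scalar signal value per node. Node names are hypothetical.
graph_edges = {"a": ["b", "c"], "b": ["a"], "c": ["a"]}
graph_signal = {"a": 0.5, "b": 1.0, "c": 1.5}


def neighbor_mean(node):
    """Average the signal over a node's neighbors, using only graph structure."""
    neighbors = graph_edges[node]
    return sum(graph_signal[n] for n in neighbors) / len(neighbors)


print(len(object_position))  # dimension of the coordinate space
print(len(image))            # number of locations in the signal's domain
print(neighbor_mean("a"))    # a computation that depends on the graph's edges
```

In the first case the space is where the data lives; in the second and third, the space is the domain on which the data is defined, so an operation like `neighbor_mean` has to respect that domain's structure.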

The paper then goes on to “review a large and disparate body of literature of non-Euclidean generalizations of algorithms classically defined for data residing in Euclidean spaces.” The algorithms presented assume that certain topological, algebraic, or geometric structures of the data or problem are known. However, the paper does not discuss methods for cases where such structures are unknown. For example, methods that fall into the category of topological data analysis, metric learning, or group learning are not covered.

First undergrad group completes their senior project from the HCI wing.

/in News/by aburifat

Congratulations to them!

Notably, during this project they published two conference papers, and another article is in preparation.

CCDS student James selected for the CERN Summer Student Program 2024

/in News/by aburifat

The Centre for Computational and Data Sciences (CCDS), Independent University, Bangladesh (IUB), is proud to announce that James Peter Gomes, a dedicated undergraduate research student at CCDS and a Physics (Honors) major in the Department of Physical Sciences, was selected earlier this year to participate in the prestigious CERN Summer Student Program 2024. He was the only Bangladeshi student among the 300 selected out of around 10,500 applicants worldwide. The fully funded eight-week program was held at CERN’s Meyrin site in Geneva, Switzerland, from 24th June to 16th August 2024.

For those who are not aware, CERN, the European Organization for Nuclear Research, stands as one of the world’s foremost centers for scientific inquiry and collaboration. Located near Geneva, Switzerland, CERN is renowned for its groundbreaking research in particle physics and its role in advancing our understanding of the fundamental forces and particles that govern the universe. Participation in the CERN Summer Student Program provides aspiring physicists like James with a unique opportunity to immerse themselves in this vibrant scientific community, engage in cutting-edge research projects, and collaborate with leading experts in the field.

Here’s what James has to say about his experience.

“During the internship, I worked as associated personnel of the LHCb (Large Hadron Collider beauty) Detector Group (EP-LBD). This experimental collaboration mostly focuses on CP violation, which distinguishes between particles and antiparticles in nature. This asymmetry is important for cosmological observations. Because the asymmetry is very minute, measuring it requires very good statistics from the last dataset obtained from the collisions in the Large Hadron Collider (LHC) via the highly efficient detectors in the experimental setup. One also needs a good understanding of the detectors, which is studied via simulations. My responsibilities included testing and exploring the integration of the GPU-based simulation prototype, AdePT (Accelerated demonstrator of electromagnetic Particle Transport), into the Gaussino simulation framework for the LHCb experiment. This initiative aimed to enhance the efficiency and accuracy of particle physics simulations, thereby advancing our understanding of fundamental particles and their interactions. My supervisors were incredibly supportive and patient throughout this project. They generously shared their expertise and guidance, always willing to answer my questions and clarify my doubts. Their mentorship was invaluable, as I was still learning to navigate the tools required for this work.

Furthermore, I participated in a series of lectures on physics, from the Standard Model to Beyond Standard Model to Quantum Gravity, delivered by CERN personnel, active researchers, and distinguished professors like David Tong, throughout the weekdays from July 2nd to August 2nd.

One of the program’s greatest benefits was the comprehensive support it provided to participants. We enjoyed CERN’s health insurance, a full travel allowance, and a daily stipend. Additionally, we had access to world-class facilities like laboratories, libraries, and computing resources. These resources were instrumental in fostering collaboration and advancing our research.

Quite interestingly, almost a third of the summer students were from a Computer Science and Engineering background. In fact, I was one of only four physics students among the 11 summer students on the simulation team; the rest were from CSE-related backgrounds. It seemed that my prior knowledge of specialized tools like ROOT, Pythia8, GEANT4, and FeynCalc proved invaluable in securing this internship. These skills are essential for the computing-intensive projects that CERN undertakes. The CERN summer internship program, being computing-heavy, offers a valuable opportunity for students with strong programming and Linux skills.

I’m deeply grateful to my supervisor, Dr. Arshad Momen, for his invaluable guidance throughout my time at IUB. His patience and mentorship have been instrumental in helping me discover my passion for physics and choose the right path. I first met Arshad Sir in my first semester and have been fortunate to learn from his expertise ever since. It was thanks to his encouragement that I learned about the CERN Summer Student Program and developed the skills necessary to participate. I’m truly thankful for his support.”

One paper has been accepted in ECAI 2024

/in Publications/by aburifat

Congratulations to our senior project student Fahim Ahmed and research assistant Md Fahim on getting their paper accepted at the CORE rank A conference, the European Conference on AI (ECAI), https://www.ecai2024.eu/ . The acceptance rate for ECAI 2024 was very competitive (24%). The paper is titled “Improving the Performance of Transformer-based Models Over Classical Baselines in Multiple Transliterated Languages”.

Here is a short description of the paper:

Online discourse, by its very nature, is rife with transliterated text along with code-mixing and code-switching. Transliteration is heavily featured due to the ease of inputting romanized text with standard keyboards over native scripts. Due to its ubiquity, it is a critical area of study to ensure NLP models perform well in real-world scenarios.

In this paper, we analyze the performance of various language models on the classification of romanized/transliterated social media text. We chose the tasks of sentiment analysis and offensive language identification. We carried out experiments for three different languages, namely Bangla, Hindi, and Arabic (for six datasets). To our surprise, we discovered across multiple datasets that the classical machine learning methods (Logistic Regression (LR), Support Vector Machine (SVM), Random Forest (RF), and XGBoost) perform very competitively with fine-tuned transformer-based mono/multilingual language models (BanglishBERT, HingBERT, DarijaBERT, XLM-RoBERTa, mBERT, and mDeBERTa), tiny LLMs (Gemma-2B and TinyLLaMa), and ChatGPT for classification tasks in transliterated text. Additionally, we investigated various mitigation strategies such as translation and augmentation via the use of ChatGPT, as well as dataset-specific pretraining of language models with Masked Language Modelling. Depending on the dataset and language, employing those mitigation techniques yields a 2-3% further improvement in accuracy and macro-F1 above baseline.

We demonstrate that TF-IDF and BoW-based classifiers achieve performance within around 3% of fine-tuned LMs and could thus be considered a strong baseline for transliterated text-based NLP tasks.
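As a rough illustration of what a TF-IDF plus classical-classifier baseline looks like, here is a minimal, dependency-free Python sketch. It is not the paper's pipeline: the four romanized training sentences and their labels are invented for the example, and the hand-rolled TF-IDF and gradient-descent logistic regression stand in for whatever library implementations the authors actually used.

```python
import math
from collections import Counter

# Invented toy examples (NOT from the paper's datasets): 1 = positive, 0 = negative.
TRAIN = [
    ("khub bhalo movie", 1),
    ("onek bhalo gaan", 1),
    ("khub kharap movie", 0),
    ("onek kharap gaan", 0),
]


def tokenize(text):
    return text.lower().split()


def fit_idf(docs):
    """Smoothed inverse document frequency for every training token."""
    n_docs = len(docs)
    doc_freq = Counter(tok for doc in docs for tok in set(tokenize(doc)))
    return {tok: math.log((1 + n_docs) / (1 + df)) + 1.0 for tok, df in doc_freq.items()}


def tfidf(text, idf):
    """L2-normalised TF-IDF vector as a sparse dict; unseen tokens are dropped."""
    counts = Counter(tok for tok in tokenize(text) if tok in idf)
    vec = {tok: cnt * idf[tok] for tok, cnt in counts.items()}
    norm = math.sqrt(sum(v * v for v in vec.values())) or 1.0
    return {tok: v / norm for tok, v in vec.items()}


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logreg(vectors, labels, epochs=200, lr=0.5):
    """Plain stochastic gradient descent on the logistic loss."""
    weights, bias = Counter(), 0.0
    for _ in range(epochs):
        for vec, y in zip(vectors, labels):
            pred = sigmoid(bias + sum(weights[t] * v for t, v in vec.items()))
            err = pred - y
            bias -= lr * err
            for t, v in vec.items():
                weights[t] -= lr * err * v
    return weights, bias


def predict(text, idf, weights, bias):
    vec = tfidf(text, idf)
    score = bias + sum(weights[t] * v for t, v in vec.items())
    return int(sigmoid(score) >= 0.5)


texts = [t for t, _ in TRAIN]
labels = [y for _, y in TRAIN]
idf = fit_idf(texts)
weights, bias = train_logreg([tfidf(t, idf) for t in texts], labels)

print(predict("bhalo gaan", idf, weights, bias))
print(predict("kharap movie", idf, weights, bias))
```

A baseline of this kind has no pretrained vocabulary to mismatch with romanized spellings, because it learns token weights directly from the training text, which is one plausible reason such models remain competitive on transliterated data.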

5 papers from CCDS have been accepted at ICPR 2024

/in Publications/by aburifat

1.

Dehan, Farhan Noor; Fahim, Md; Rahman, AKM Mahabubur; Amin, M Ashraful; Ali, Amin Ahsan

TinyLLM Efficacy in Low-Resource Language

In: 27th International Conference on Pattern Recognition, ICPR, IEEE, Kolkata, India, 2024.

2.

Sultana, Faria; Fuad, Md Tahmid Hasan; Fahim, Md; Rahman, Rahat Rizvi; Hossain, Meheraj; Amin, M Ashraful; Rahman, AKM Mahabubur; Ali, Amin Ahsan

How Good are LM and LLMs in Bangla Newspaper Article Summarization?

In: 27th International Conference on Pattern Recognition, ICPR, IEEE, Kolkata, India, 2024.

3.

Kim, Minha; Bhaumik, Kishor; Ali, Amin Ahsan; Woo, Simon

MIXAD: Memory-Induced Explainable Time Series Anomaly Detection

In: 27th International Conference on Pattern Recognition, ICPR, IEEE, Kolkata, India, 2024.

4.

Bhaumik, Kishor; Kim, Minha; Niloy, Fahim Faisal; Ali, Amin Ahsan; Woo, Simon

SSMT: Few-Shot Traffic Forecasting with Single Source Meta-Transfer Learning

In: IEEE Int’l Conf on Image Processing, ICIP, IEEE, Abu Dhabi, 2024.

5.

Hossain, Mir Sazzat; Rahman, AKM Mahbubur; Amin, Md. Ashraful; Ali, Amin Ahsan

Lightweight Recurrent Neural Network for Image Super-resolution

In: IEEE Int’l Conf on Image Processing, ICIP, IEEE, Abu Dhabi, 2024.

Self-Supervised Model for Domain Adaptation in Built-Up Area Categorization

/in Uncategorized/by aburifat

The urban environment is a complex system comprising various elements such as buildings, roads, vegetation, and water bodies. Classifying urban areas from satellite images or images captured by UAVs is an important task for urban planning, disaster management, and environmental monitoring. Urban environments differ across the cities of the world, and existing models for urban classification struggle to adapt to these differences. This project aims to develop an adaptive model for classifying urban areas from satellite images. The model will be able to generalize across different urban environments and adapt to a changing urban environment in real time. It will be trained on a large dataset of satellite images of cities from different parts of the world. At inference (test) time, the parameters of the model will be updated based on the changes in the urban environments in which it has been deployed. This work will build on recent work on test-time domain adaptation methods and on our earlier research on the categorization of urban built-up areas [1], land usage, and land cover [2,3].
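The description above does not say which test-time adaptation method the project will use. As one simple stand-in from that family, the Python sketch below re-estimates feature normalization statistics from unlabeled test batches, so that inputs from a new city are rescaled before classification. The class name, the `momentum` value, and all numbers are hypothetical, and a real system would likely update more of the model than this.

```python
class AdaptiveNormalizer:
    """Keeps per-feature mean/variance and nudges them toward each test batch."""

    def __init__(self, mean, var, momentum=0.1):
        self.mean = list(mean)
        self.var = list(var)
        self.momentum = momentum  # hypothetical hyperparameter

    def adapt(self, batch):
        """Update the stored statistics from an unlabeled test batch."""
        n = len(batch)
        for j in range(len(self.mean)):
            column = [row[j] for row in batch]
            batch_mean = sum(column) / n
            batch_var = sum((x - batch_mean) ** 2 for x in column) / n
            # Exponential moving average: move part of the way to the batch value.
            self.mean[j] += self.momentum * (batch_mean - self.mean[j])
            self.var[j] += self.momentum * (batch_var - self.var[j])

    def normalize(self, row, eps=1e-5):
        """Standardize one feature vector with the current statistics."""
        return [(x - m) / (v + eps) ** 0.5 for x, m, v in zip(row, self.mean, self.var)]


# Statistics "learned" on a source city (made-up numbers).
norm = AdaptiveNormalizer(mean=[0.0, 0.0], var=[1.0, 1.0], momentum=0.5)

# Unlabeled features from a new city whose distribution has shifted.
target_batch = [[4.0, 10.0], [6.0, 14.0]]
norm.adapt(target_batch)

print(norm.mean)
print(norm.var)
print(norm.normalize([5.0, 12.0]))
```

No labels from the new city are needed for this update, which is what makes it usable at deployment time; the trade-off is that it only corrects shifts in feature scale and offset.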

Related Works:

1. Cheng, Q.; Zaber, M.; Rahman, A.M.; Zhang, H.; Guo, Z.; Okabe, A.; Shibasaki, R. Understanding the Urban Environment from Satellite Images with New Classification Method—Focusing on Formality and Informality. Sustainability 2022, 14, 4336. https://doi.org/10.3390/su14074336

2. Rahman, A.K.M.M.; Zaber, M.; Cheng, Q.; Nayem, A.B.S.; Sarker, A.; Paul, O.; Shibasaki, R. Applying State-of-the-Art Deep-Learning Methods to Classify Urban Cities of the Developing World. Sensors 2021, 21, 7469. https://doi.org/10.3390/s21227469

3. Niloy, Fahim Faisal, et al. “Attention toward neighbors: A context aware framework for high resolution image segmentation.” 2021 IEEE International Conference on Image Processing (ICIP). IEEE, 2021.

IP:

Co-IP:

CONTACT

Center for Computational & Data Sciences (CCDS), Independent University, Bangladesh (IUB).

Plot 16, Aftabuddin Ahmed Road, Block B, Bashundhara RA, Dhaka 1229, Bangladesh.

- Email: ccds@iub.edu.bd

- Phone: +88 01885 570 597