Explainable Hate Speech Detection: ICML 2023 Workshop Acceptance

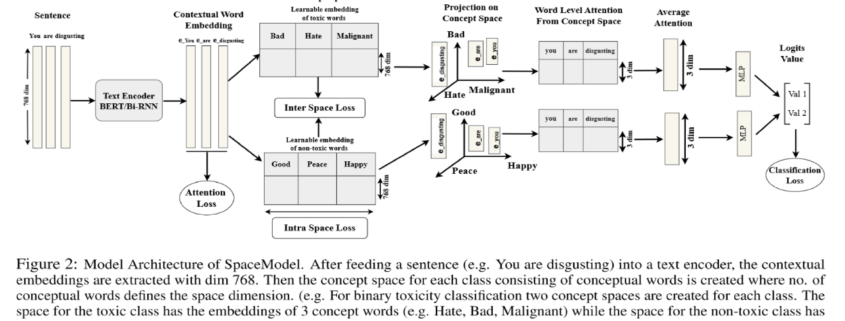

Recently, Md Fahim, an RA at CCDS, together with co-authors from UToronto, IUT, and Fordham University, had a paper accepted at the AI and HCI workshop of ICML 2023. The paper proposes an interpretability- and explainability-oriented model that detects hate speech using pre-trained large language models. It creates dynamic, class-specific conceptual subspaces and obtains class-specific attention by projecting the contextual embeddings onto those subspaces. These attention weights provide better explainability for the detection task.
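
To give a rough feel for the projection idea, here is a minimal toy sketch in plain Python. It is not the paper's implementation. The function names, the fixed hand-written concept vectors (the paper's subspaces are dynamic), the use of projection length as a relevance score, and the softmax normalization are all assumptions made for illustration only.

```python
import math


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def orthonormalize(vectors):
    """Gram-Schmidt: turn concept vectors into an orthonormal subspace basis."""
    basis = []
    for v in vectors:
        w = list(v)
        for b in basis:
            coeff = dot(w, b)
            w = [wi - coeff * bi for wi, bi in zip(w, b)]
        norm = math.sqrt(dot(w, w))
        if norm > 1e-12:  # skip vectors that add no new direction
            basis.append([wi / norm for wi in w])
    return basis


def class_attention(token_embeddings, class_concepts):
    """Hypothetical helper: one attention distribution over tokens per class.

    Each token is scored by the length of its projection onto the class
    subspace; the scores are then normalized with a softmax (an assumption).
    """
    attention = {}
    for label, concepts in class_concepts.items():
        basis = orthonormalize(concepts)
        scores = [
            math.sqrt(sum(dot(tok, b) ** 2 for b in basis))
            for tok in token_embeddings
        ]
        peak = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        attention[label] = [e / total for e in exps]
    return attention


# Toy 3-dimensional "contextual embeddings" for three tokens (made-up values).
tokens = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
# Made-up concept vectors spanning one subspace per class.
concepts = {
    "hate": [[1.0, 0.0, 0.0]],
    "normal": [[0.0, 1.0, 0.0], [0.0, 0.0, 1.0]],
}

attention = class_attention(tokens, concepts)
for label, weights in attention.items():
    print(label, [round(w, 3) for w in weights])
```

In this toy setup, the first token lies entirely in the "hate" subspace and so receives the largest "hate" attention weight, which is the kind of per-class, per-token signal that can be shown to a reader as an explanation.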

Paper Link: HateXplain2.0: An Explainable Hate Speech Detection Framework Utilizing Subjective Projection from Contextual Knowledge Space to Disjoint Concept Space