Crop Identification and Yield Estimation using SAR Data

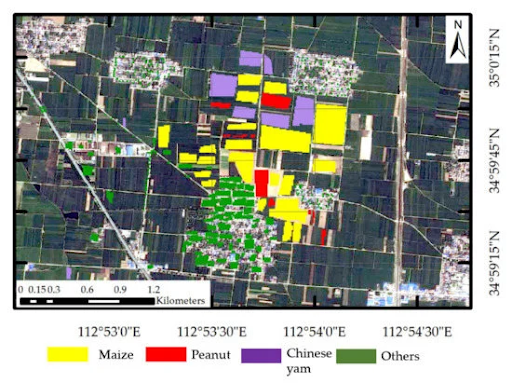

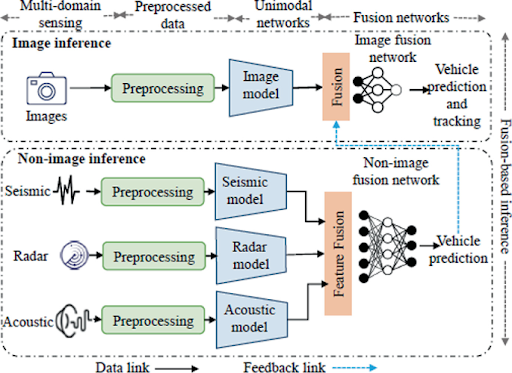

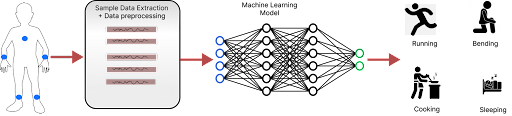

Accurate crop identification and yield estimation help policymakers develop effective agricultural policies, allocate resources efficiently, and support farmers in adopting suitable technologies. However, the optical remote sensing methods commonly used for these tasks are hampered by cloud cover and adverse weather conditions. King et al. (2013) [4] estimated that approximately 67 percent of the Earth’s surface is typically obscured by clouds, which makes high-quality optical remote sensing data difficult to obtain. Humid and semi-humid climate zones with abundant water sources pose further challenges for remote sensing in agriculture.

To overcome these limitations, this project aims to use Synthetic Aperture Radar (SAR) data for crop identification and yield estimation. SAR uses microwaves that can penetrate clouds, so it enables continuous data collection regardless of light and weather conditions. Because SAR is sensitive to both the dielectric and geometrical characteristics of plants, it captures information from below the vegetation canopy and provides insights into crop structure and health. SAR also offers flexibility in imaging parameters such as incidence angle and polarization configuration, which facilitates the extraction of diverse information about agricultural landscapes.
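As a rough illustration of how different polarization configurations might be turned into features for crop identification, the sketch below converts co-polarized (VV) and cross-polarized (VH) backscatter from linear power to decibels and derives their ratio. The dual-polarization VV/VH setup, the function names, and the sample values are assumptions made for this example; they are not taken from the project itself.

```python
import math


def to_db(sigma0_linear):
    """Convert a linear backscatter power value to decibels."""
    if sigma0_linear <= 0:
        raise ValueError("backscatter must be positive to convert to dB")
    return 10.0 * math.log10(sigma0_linear)


def polarization_features(vv_linear, vh_linear):
    """Build simple per-pixel features from VV and VH backscatter.

    Hypothetical helper: assumes both inputs are calibrated
    backscatter values in linear power units.
    """
    vv_db = to_db(vv_linear)
    vh_db = to_db(vh_linear)
    return {
        "vv_db": vv_db,
        "vh_db": vh_db,
        # A ratio in linear units becomes a difference in dB.
        "cross_ratio_db": vh_db - vv_db,
    }


# Illustrative values only, not measurements.
features = polarization_features(vv_linear=0.1, vh_linear=0.01)
print(features)
```

Features of this kind could be computed per pixel and per acquisition date, then passed to a classifier alongside other inputs.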

Related Works:

1. D. Suchi, A. Menon, A. Malik, J. Hu and J. Gao, Crop Identification Based on Remote Sensing Data using Machine Learning Approaches for Fresno County, California, 2021 IEEE Seventh International Conference on Big Data Computing Service and Applications (BigDataService), Oxford, United Kingdom, 2021, pp. 115-124, doi: 10.1109/BigDataService52369.2021.00019.

2. Liu, C., Chen, Z., Shao, Y., Chen, J., Hasi, T., & Pan, H. (2019). Research advances of SAR remote sensing for agriculture applications: A review. Journal of Integrative Agriculture, 18(3), 506-525.

3. J. Singh, U. Devi, J. Hazra and S. Kalyanaraman, Crop-Identification Using Sentinel-1 and Sentinel-2 Data for Indian Region, IGARSS 2018 – 2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 2018, pp. 5312-5314, doi: 10.1109/IGARSS.2018.8517356.

4. King, M. D., Platnick, S., Menzel, W. P., Ackerman, S. A., & Hubanks, P. A. (2013). Spatial and Temporal Distribution of Clouds Observed by MODIS Onboard the Terra and Aqua Satellites. IEEE Transactions on Geoscience and Remote Sensing, 51(7), 3826–3852. doi:10.1109/tgrs.2012.2227333